Installing PySpark on Windows: A Step-by-Step Guide

- Harini Mallawaarachchi

- Dec 30, 2023


Apache Spark is a powerful open-source distributed computing system that provides a fast, general-purpose cluster-computing framework for big data processing. PySpark, the Python API for Apache Spark, lets developers use Spark's capabilities from the Python programming language. While PySpark is most commonly used in Linux environments, installing it on a Windows machine can be a bit challenging. In this blog post, we will walk you through the steps to successfully install PySpark on a Windows system.

Step 1 - Install JDK

PySpark requires Java to run. Spark runs on Java 8, 11, or 17, so download and install one of these versions from the official Oracle website rather than simply picking the newest JDK release.
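Once a JDK is installed, the version requirement can also be checked from a script. The following is a minimal sketch, assuming `java` is on the PATH; the helper names `parse_java_major` and `installed_java_major` are made up for this example. It calls `java -version` (single dash), which every Java release accepts.

```python
import re
import shutil
import subprocess

SUPPORTED_MAJORS = {8, 11, 17}  # the Java versions this guide lists for Spark


def parse_java_major(version_output):
    """Return the major Java version found in `java -version` output, or None."""
    match = re.search(r'version "(\d+)(?:\.(\d+))?', version_output)
    if match is None:
        return None
    first = int(match.group(1))
    # Java 8 and older report "1.8.0_xxx"; newer releases report "17.0.9".
    if first == 1 and match.group(2) is not None:
        return int(match.group(2))
    return first


def installed_java_major():
    """Run `java -version` and parse it; None if java is missing or unparseable."""
    java = shutil.which("java")
    if java is None:
        return None
    # `java -version` writes to stderr, so both streams are captured.
    result = subprocess.run([java, "-version"], capture_output=True, text=True)
    return parse_java_major(result.stderr + result.stdout)


major = installed_java_major()
if major is None:
    print("No java executable found on PATH (or its version could not be parsed)")
elif major in SUPPORTED_MAJORS:
    print(f"Java {major} is supported")
else:
    print(f"Java {major} is not in the supported set {sorted(SUPPORTED_MAJORS)}")
```

If the script reports that no executable was found even though a JDK is installed, the JDK's `bin` folder is probably missing from the PATH.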

Java Development Kit (JDK): JDK 17

Install it in the following path:

C:/java/jdk

To verify the installed Java version, run the following command in cmd (Java 8 only accepts the single-dash form, java -version):

java --version

Step 2 - Install Spark

Go to the downloads page on the Apache Spark website and select the latest version.

Download the archive and use WinRAR or 7-Zip to extract its contents.
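If Python is already available on the machine, the archive can also be unpacked without a separate tool. Spark downloads are gzip-compressed tar files (.tgz), so the sketch below uses Python's standard `tarfile` module instead of WinRAR or 7-Zip. The archive name and the `extract_archive` helper are placeholders for illustration, not names from the Spark download page.

```python
import tarfile
from pathlib import Path


def extract_archive(archive_path, destination):
    """Extract a .tgz archive into `destination`; return its top-level entries."""
    destination = Path(destination)
    destination.mkdir(parents=True, exist_ok=True)
    with tarfile.open(archive_path, "r:gz") as archive:
        # Collect the first path component of every member (usually one folder).
        top_level = sorted(
            {Path(name).parts[0] for name in archive.getnames() if Path(name).parts}
        )
        if hasattr(tarfile, "data_filter"):
            # Newer Python versions can reject unsafe members such as absolute paths.
            archive.extractall(destination, filter="data")
        else:
            archive.extractall(destination)
    return top_level


archive = Path("spark-download.tgz")  # hypothetical name; use the file you downloaded
if archive.exists():
    # Assumed target: a PySpark-Learn folder inside the user's home directory.
    print(extract_archive(archive, Path.home() / "PySpark-Learn"))
else:
    print(f"{archive} not found; point the script at the downloaded archive")
```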

Extract into the following path:

C:\Users\harin\PySpark-Learn

Step 3 - Install Python

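Install Python. Spark only runs on Python 3.7+, and recent Spark releases raise that minimum, so check the documentation for the version you downloaded.

After installation, create a virtual environment and activate it. Once it is active, the environment name appears at the beginning of the prompt.

python -m venv .pyspark-env

.pyspark-env\Scripts\activate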
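Besides looking at the prompt, the interpreter itself can report whether the environment is active. The sketch below assumes an environment created with `venv`, as above; `in_virtualenv` and `python_is_supported` are illustrative helper names, and the 3.7 minimum is simply the figure quoted in this guide.

```python
import sys

MIN_PYTHON = (3, 7)  # minimum Python version stated in this guide


def in_virtualenv():
    """True when the interpreter runs inside a venv-created environment."""
    # Inside a venv, sys.prefix points at the environment folder,
    # while sys.base_prefix still points at the base Python installation.
    return sys.prefix != sys.base_prefix


def python_is_supported(version_info=sys.version_info):
    """Compare the (major, minor) part of a version against MIN_PYTHON."""
    return tuple(version_info[:2]) >= MIN_PYTHON


print("Python", sys.version.split()[0], "supported:", python_is_supported())
print("Virtual environment active:", in_virtualenv())
print("Interpreter:", sys.executable)
```

When the environment is active, the interpreter path printed on the last line should sit inside the `.pyspark-env` folder.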

Step 4 - Install pyspark, JupyterLab

Now install pyspark, findspark, and JupyterLab, the popular notebook interface for Python.

Navigate into the relevant folder path in cmd.

cd C:\Users\harin\PySpark-Learn

Run the following commands one by one in cmd, in the folder where you set up the Python environment.

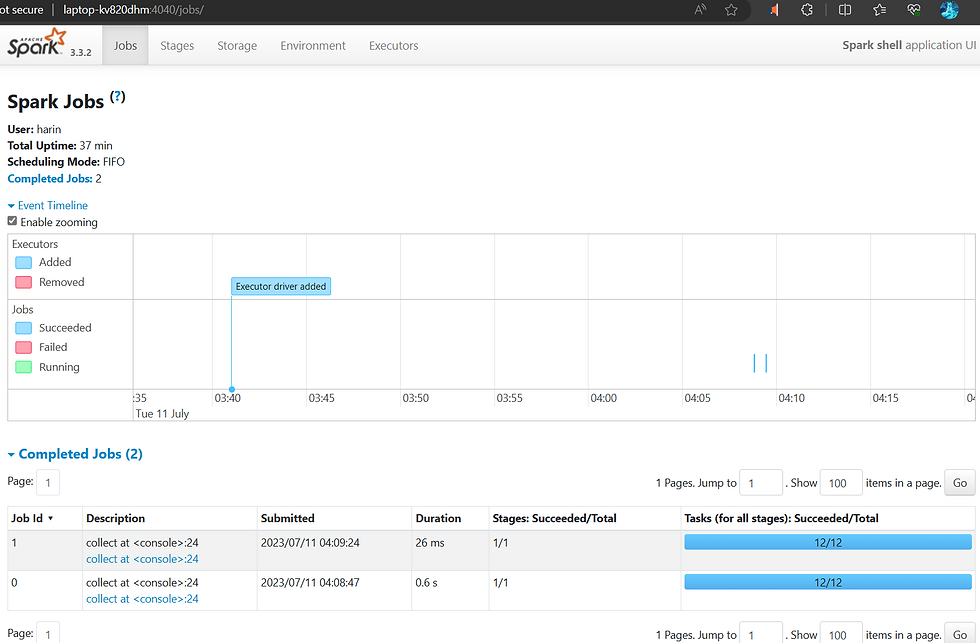

pip install pyspark

pip install findspark

pip install jupyterlab

Step 5 - Test PySpark Installation
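Before opening a notebook, it can help to confirm that pip actually placed the three packages in the active environment. The sketch below is one way to do that with the standard `importlib.metadata` module, which needs Python 3.8 or newer; `installed_versions` is a helper name invented for this example.

```python
from importlib import metadata

# The three packages installed with pip in Step 4.
PACKAGES = ["pyspark", "findspark", "jupyterlab"]


def installed_versions(names):
    """Map each distribution name to its installed version, or None if missing."""
    versions = {}
    for name in names:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


for name, version in installed_versions(PACKAGES).items():
    print(f"{name}: {version or 'NOT INSTALLED'}")
```

A package listed as NOT INSTALLED usually means pip ran outside the virtual environment, so activating the environment and repeating the install is a reasonable first thing to try.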

Note: Open JupyterLab

Now you've installed all the required software for PySpark.
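To confirm that the pieces work together, a notebook cell along the following lines can start a small local Spark session and run one trivial job. This is a minimal sketch rather than an official test: the app name `install-check`, the `local[1]` master setting, and both helper functions are choices made for this example, and the Java check only looks for `java` on the PATH.

```python
import importlib.util
import shutil


def spark_prerequisites():
    """Report whether the pyspark package and a java executable can be found."""
    return {
        "pyspark": importlib.util.find_spec("pyspark") is not None,
        "java": shutil.which("java") is not None,
    }


def spark_smoke_test():
    """Start a local Spark session, count a 5-row range, then stop the session."""
    # Imported lazily so this cell still loads when pyspark is missing.
    from pyspark.sql import SparkSession

    spark = (
        SparkSession.builder
        .master("local[1]")          # run Spark in-process on a single core
        .appName("install-check")    # arbitrary name for this check
        .getOrCreate()
    )
    try:
        return spark.range(5).count()
    finally:
        spark.stop()


checks = spark_prerequisites()
if all(checks.values()):
    print("Spark counted", spark_smoke_test(), "rows")
else:
    print("Missing prerequisites:", [name for name, ok in checks.items() if not ok])
```

On a working installation the cell should report 5 rows. Spark may print warnings on Windows while starting up, and these do not necessarily mean the installation failed.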

Whenever you want to run JupyterLab, simply run the following lines in cmd to open the notebook interface.

cd C:\Users\harin\PySpark-Learn

python -m venv .pyspark-env

.pyspark-env\Scripts\activate

jupyter-lab

Reference: PySpark Tutorial for Beginners - YouTube

🔗 GitHub Repository: https://github.com/coder2j/pyspark-tu...
